Navigating AI-Related Risks: A Guide for Directors and Officers

As AI evolves, directors and officers must maneuver through a complex landscape of regulatory and legal risks. Implementing best practices around the use of AI and robust governance-focused risk mitigation can help manage exposures.

Key Takeaways

- While AI has opened the door to efficiencies in business operations and offered new ways of working, directors and officers must tread carefully when implementing the technology and externally communicating its use.

- It is crucial for directors and officers to comply with evolving AI regulations, making sure that transparency and consumer rights underpin governance frameworks.

- Effective risk management starts at the earliest stages of AI use and must be reinforced with consistent re-evaluation.

Artificial intelligence (AI) will likely become the next big driver of increased earnings volatility for global organizations and emerge as a top 20 risk in the next three years, according to Aon's Global Risk Management Survey. Already, almost 40 percent of S&P 500 companies have mentioned AI in their most recent annual reports filed with the U.S. Securities and Exchange Commission (SEC). S&P 500 companies that cited AI on Q1 2024 earnings calls have seen better average stock price performance over the past 12 months than those that did not.1

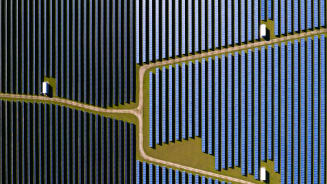

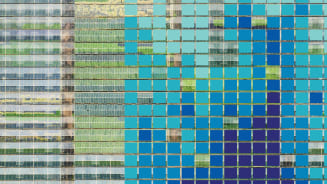

Key Industries Mentioning AI on Earnings Calls

Key ways in which AI is creating opportunities for organizations include:

- Empowering workforces to be more productive, personalizing processes and providing training in new and engaging ways. Employees are looking for organizations to deploy AI technologies in ways that enrich the workplace experience.

- Assessing which tasks could be augmented by AI, given the kinds of work that people do today. The goal is to reduce manual, redundant work and create additional capacity for employees to spend time on critical thinking.

- Elevating and streamlining business processes using AI. Companies can use the technology to review supplier contracts more efficiently and to help internal committees digest large amounts of information when making decisions.

While AI implementation can help accelerate some business functions, it also exposes directors and officers to risks associated with the technology’s use. In this environment, directors and officers (D&O) insurance, alongside robust risk management strategies, is a key tool for managing AI-related exposures.

Here are three AI-related risks and corresponding guidance for directors and officers to manage exposures.


Risk #1: AI Washing Lawsuits

AI washing refers to the practice of companies falsely touting their use of AI. Some companies have claimed to use AI when they are actually using less sophisticated computing technologies. Others have overstated the efficacy of their AI over existing techniques or suggested that their AI solutions are fully operational when that is not the case.2

The recent Innodata lawsuit in the U.S. illustrates the risk facing companies that make AI disclosures, and their directors and officers: those that make false or misleading claims about AI capabilities may become targets for the plaintiffs’ bar.3

Additionally, in March 2024, the U.S. Securities and Exchange Commission (SEC) charged two investment advisors with making false and misleading statements about their use of AI. The advisors agreed to pay a collective $400,000 in civil penalties. This action is consistent with SEC Chair Gary Gensler’s warning of “war without quarter” against companies and their directors and officers who raise money from the public without being “truthful about [the company’s] use of AI and associated risk.”4 In fact, the majority of filed AI securities class action suits are based on companies making allegedly misleading statements around the use or impact of AI, according to Aon research.

Many of these cases follow a similar pattern: a firm touts its AI capabilities, and it is later allegedly revealed that the AI is not as developed as described. The consequences can include a drop in the company’s stock price and shareholder claims under the Securities Exchange Act of 1934.

The Bottom Line:

Directors and officers should consider how to substantiate any AI claims their company makes. “They need to be aware of the risks when the company makes a claim about AI being a key enabler to the organization’s profitability or efficiency, or when referencing solutions offered to customers that have an AI-related enablement,” explains Katie Hill, a director in Aon’s Talent Solutions practice in the U.S. If an organization is disclosing how it is using AI as part of its business strategy, directors and officers should expect to provide evidence to verify the claim.

There is no one-size-fits-all answer for what that proof point will be. A company may need to show a percentage increase in revenue directly attributable to AI, or quantify the efficiencies gained from its use. Transparency in disclosures and in stakeholder discussions about the extent to which a company is exploring AI’s applications and benefits is key to mitigating risk.

Risk #2: Regulatory Exposures

AI adoption in business operations, particularly in employment practices, has drawn both interest and regulation from legislators worldwide. Two notable evolving AI regulations are the EU AI Act and the Colorado AI Act. Transparency requirements are central to the EU regulation, while the Colorado law introduces third-party and supplier risks: companies deploying AI systems are also responsible for protecting consumers from any known or reasonably foreseeable risks of algorithmic discrimination.


EU AI Act

The new regulatory framework establishes obligations for providers and users that depend on the level of risk posed by the AI systems at issue. Many AI systems pose minimal risk, but they still need to be assessed.5

“While the EU AI Act is still being phased in and there has not yet been enforcement activity under the new Act, the prospect of liability will be a real concern as it has been written so broadly,” says Nick Reider, senior vice president and deputy D&O product leader at Aon. “In turn, there will be a significant focus among directors and officers on internal governance and internal reporting to senior management and boards of directors.”

Colorado AI Act

The new law is the first comprehensive legislation in the U.S. targeting high-risk AI systems. It requires both developers and deployers of high-risk AI systems to use reasonable care to prevent algorithmic discrimination. It also creates a rebuttable presumption that reasonable care was used if they meet certain requirements, including publicly disclosing certain information about their high-risk AI systems.6

Other frameworks and regulations around the world also address these risks, including Canada’s proposed Artificial Intelligence and Data Act (part of Bill C-27), Australia’s AI Ethics Framework, released in 2019, and Article 22 of the European Union’s General Data Protection Regulation (GDPR), which includes specific provisions on automated decision-making and profiling.

U.S. state and local regulations focused on this issue include the Illinois Artificial Intelligence Video Interview Act (2020), New York City’s Automated Employment Decision Tool Law (AEDT, 2023) and Washington, D.C.’s Stop Discrimination by Algorithms Act (2023). U.S. states with emerging legislation on automated employment decision-making related to surveillance and monitoring include Maine, New York, Vermont and Massachusetts. The White House has also published its Blueprint for an AI Bill of Rights, which will likely serve as a framework for state and federal legislation.

The Bottom Line:

As regulations develop, directors and officers must ensure that risk mitigation strategies focus on overseeing the use of automated tools in business operations. Organizations should first assess where the risk resides, which is determined to a certain degree by what kind of AI tool they’re using and how it is being used. Then, leaders need to survey the regulatory landscape to identify the rules and regulations that apply to their business, before preparing messaging and disclosures for regulatory bodies on the application of these tools.

Organizations using AI should also develop a skillset internally to select the best tools to inform workforce decisions and rigorously audit their impact. “These are skills that many organizations still need to develop,” says Marinus van Driel, a partner in Aon’s Workforce Transformation Advisory team. “They might not have that capability in-house because of a lack of knowledge and because the regulatory environment is unfolding in real time.”

Risk #3: Shareholder Derivative Lawsuits and Additional Exposures

Besides securities class actions and regulatory claims, directors and officers face the risk of shareholder derivative claims alleging malfeasance with respect to corporate implementation and use of AI.

Derivative lawsuits give corporate shareholders a platform to bring claims on behalf of the corporation and against the corporation’s directors and officers for a wide range of alleged misconduct, subject to specific procedural requirements. In the past decade, derivative settlements have involved increasingly large monetary components — millions, and sometimes even hundreds of millions, of dollars.

Corporate misuse of AI can lead to a variety of unfavorable outcomes, including liabilities arising from intellectual property infringement claims, privacy claims, and defamation or similar tort claims.

The specter of such potential liability for corporate officers with day-to-day management responsibilities is particularly acute considering the recent case law in Delaware confirming for the first time that officers — and not just directors — may face non-exculpable liability for failing to carry out their oversight obligations in breach of their fiduciary duty of loyalty to the company.

Other AI-related exposures impacting directors and officers include:

- Product liability and breach of contract claims, including failure to ensure that an AI product that caused harm was free from defects.

- Competition claims that arise if AI was used to recommend transactions in price-sensitive securities or to set the price of goods or services sold by a business. Boards must make sure that the AI is not relying on inside information or causing the company to coordinate its prices with competitors in an anti-competitive manner.

The Bottom Line:

Given the non-indemnifiable nature of what are often substantial monetary settlements in derivative lawsuits, Side A D&O insurance — which provides first-dollar coverage to directors and officers for non-indemnifiable loss — is critical for protecting individual directors’ and officers’ personal assets when derivative lawsuit settlements are negotiated and ultimately funded. Without Side A coverage, directors and officers may have to pay derivative settlements or judgments out of pocket.

Strategies to Consider for a Successful AI Approach

Companies implementing and using AI are encouraged to audit their D&O policies regularly to ensure coverage is appropriately tailored, and to seek solutions that can enhance AI-related D&O coverage. Broadening definitions of key terms, such as “loss” and “insured person,” and refining typical exclusionary language can optimize coverage for AI-related exposures.

In D&O underwriting meetings, organizations should also be prepared to address their reliance on AI, any financial or other prospects tied to AI, their corporate governance and oversight of AI, and how AI-related disclosures are vetted.

As an overarching risk management strategy, AI governance should start at the earliest stages of development and be reinforced with constant evaluation. This approach applies regardless of whether companies are using predictive or generative AI tools.

Questions directors and officers can consider include:

- Who is leading AI as a risk function and as an opportunity for operational efficiencies and improvements? How does this impact what the company sells to clients?

- How are sensitive company data assets protected from exposure to AI used by third parties or in the public domain?

- Does the company have a formal AI policy for employees? Are all employees aware of it? How does the company assess compliance with its formal AI policy?

- Does the company proactively and publicly disclose its AI practices and policies? If so, are they mentioned in earnings reports and calls, regulatory filings or supplier agreements?

Implementing AI cannot be a check-the-box exercise. Firms should have a roadmap to know where they’re going and why they’re using the technology, tracking progress against the plan and adjusting as needed.

As AI will likely remain front and center, directors and officers should be well-equipped to speak to the organization's implementation and use of the technology. Leaders should also monitor and apply lessons from regulatory fines and litigation as the landscape continues to evolve.

1 Highest Number of S&P 500 Companies Citing “AI” on Earnings Calls Over Past 10 Years | FactSet

2 What is 'AI washing' and why is it a problem? | BBC News

3 Innodata Investor Sues Over ‘Rudimentary’ AI Offerings, Services | Bloomberg Law

4 AI, Finance, Movies, and the Law - Prepared Remarks Before the Yale Law School | SEC

5 EU AI Act: First regulation on artificial intelligence | European Parliament

6 Consumer Protections for Artificial Intelligence | Colorado General Assembly

General Disclaimer

This document is not intended to address any specific situation or to provide legal, regulatory, financial, or other advice. While care has been taken in the production of this document, Aon does not warrant, represent or guarantee the accuracy, adequacy, completeness or fitness for any purpose of the document or any part of it and can accept no liability for any loss incurred in any way by any person who may rely on it. Any recipient shall be responsible for the use to which it puts this document. This document has been compiled using information available to us up to its date of publication and is subject to any qualifications made in the document.

Terms of Use

The contents herein may not be reproduced, reused, reprinted or redistributed without the expressed written consent of Aon, unless otherwise authorized by Aon. To use information contained herein, please write to our team.
